Spring AI Multimodality

As AI technologies advance, the ability to process and generate different types of data, such as text, images, and audio, has become increasingly crucial.

This capability, known as multimodality, allows AI models to understand and produce content across multiple formats, which broadens the range of tasks they can handle.

In this article, we’ll explore what multimodality is in Spring AI, discuss its importance, and provide code examples to demonstrate how to implement various multimodal functionalities in your Spring AI projects.

What is Multimodality in Spring AI?

Multimodality in Spring AI refers to the integration of multiple AI models that can process and generate different types of data, such as text, images, and audio, within a single application.

By leveraging multimodal models, developers can create applications that are capable of performing a wide range of tasks, from generating images based on textual descriptions to transcribing audio into text.

For example, a single Spring AI-powered application could generate images from text prompts, convert text to speech, and describe the contents of an image—all within the same system.

This ability to handle several data formats within one application opens up new possibilities for creating rich, interactive, and user-friendly AI applications.

Implementing Multimodality in Spring AI

To implement multimodality in Spring AI, you need to integrate different models for processing text, images, and audio.

Below, we’ll cover how to implement four key multimodal functionalities: Text to Image Processing, Image to Text Processing, Text to Audio Processing, and Audio to Text Processing.
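As a compact overview, the four pipelines and the OpenAI model behind each one can be summarized in a small Java enum. This is a hypothetical sketch, not a Spring AI type: Modality and its of method are illustrative names, and the model ids (dall-e-3, gpt-4o, tts-1, whisper-1) are OpenAI's public model names.

```java
// Hypothetical summary of the four pipelines covered in this article.
// Not a Spring AI type; the model ids are OpenAI's public model names.
enum Modality {
    TEXT_TO_IMAGE("text", "image", "dall-e-3"),
    IMAGE_TO_TEXT("image", "text", "gpt-4o"),
    TEXT_TO_AUDIO("text", "audio", "tts-1"),
    AUDIO_TO_TEXT("audio", "text", "whisper-1");

    final String input;
    final String output;
    final String model;

    Modality(String input, String output, String model) {
        this.input = input;
        this.output = output;
        this.model = model;
    }

    // Looks up the pipeline for an input/output pair, e.g. ("audio", "text").
    static Modality of(String input, String output) {
        for (Modality m : values()) {
            if (m.input.equals(input) && m.output.equals(output)) {
                return m;
            }
        }
        throw new IllegalArgumentException("No pipeline for " + input + " -> " + output);
    }
}
```

For example, Modality.of("audio", "text").model yields "whisper-1".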

Text to Image Processing: Generating Images from Text

The Text to Image Processing feature in Spring AI allows you to generate images from textual descriptions using models like DALL-E 3.

This can be useful in applications where visual content needs to be generated dynamically based on user input.

Set Up Your Spring AI Project

We have already covered how to create a Spring AI project in a previous post. Refer to it for the initial project setup.

Implement the Text to Image Model

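Create a service that calls the DALL-E 3 model to generate images from text prompts. All of the service snippets in this article belong to a single SpringAIService class: each one shows only the imports, fields, and method needed for its step, so merge them into one class rather than creating four classes with the same name.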

```java
package com.example.demo.service;

import org.springframework.ai.image.Image;
import org.springframework.ai.image.ImageOptions;
import org.springframework.ai.image.ImagePrompt;
import org.springframework.ai.openai.OpenAiImageModel;
import org.springframework.ai.openai.OpenAiImageOptions;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.stereotype.Service;

@Service
public class SpringAIService {

    @Autowired
    private OpenAiImageModel aiImageModel;

    public Image textToImg(String text) {
        // DALL-E 3 generates one image per request; "hd" quality at 1024x1024 is a valid DALL-E 3 setting.
        ImageOptions imageOptions = OpenAiImageOptions.builder()
                .withModel("dall-e-3")
                .withQuality("hd")
                .withN(1)
                .withHeight(1024)
                .withWidth(1024)
                .build();

        ImagePrompt imagePrompt = new ImagePrompt(text, imageOptions);

        // The returned Image holds either a URL or Base64 data, depending on the response format.
        return aiImageModel.call(imagePrompt).getResult().getOutput();
    }
}
```
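DALL-E 3 only accepts a narrow set of options: exactly one image per request (n=1), quality "standard" or "hd", and the sizes 1024x1024, 1792x1024, or 1024x1792. The hypothetical DallE3OptionsCheck class below (not part of Spring AI) is a minimal sketch that rejects other combinations before a request is sent, so a bad width or height fails fast with a clear message instead of an API error.

```java
import java.util.Set;

// Hypothetical pre-flight check for DALL-E 3 image options (not a Spring AI class).
// Allowed values follow OpenAI's DALL-E 3 limits: n = 1, quality "standard" or "hd",
// and one of three sizes.
final class DallE3OptionsCheck {

    private static final Set<String> SIZES = Set.of("1024x1024", "1792x1024", "1024x1792");
    private static final Set<String> QUALITIES = Set.of("standard", "hd");

    private DallE3OptionsCheck() {}

    // Throws IllegalArgumentException if DALL-E 3 would reject this combination.
    static void validate(int n, int width, int height, String quality) {
        if (n != 1) {
            throw new IllegalArgumentException("DALL-E 3 generates exactly one image per request (n=1)");
        }
        String size = width + "x" + height;
        if (!SIZES.contains(size)) {
            throw new IllegalArgumentException("Unsupported DALL-E 3 size: " + size);
        }
        if (!QUALITIES.contains(quality)) {
            throw new IllegalArgumentException("Unsupported DALL-E 3 quality: " + quality);
        }
    }
}
```

Calling DallE3OptionsCheck.validate(1, 1024, 1024, "hd") at the start of textToImg mirrors the options used in the service above.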

Image to Text Processing: Describing Images in Text

The Image to Text Processing feature enables your application to analyze an image and describe its content in text format using models like GPT-4o.

Implement the Image to Text Model

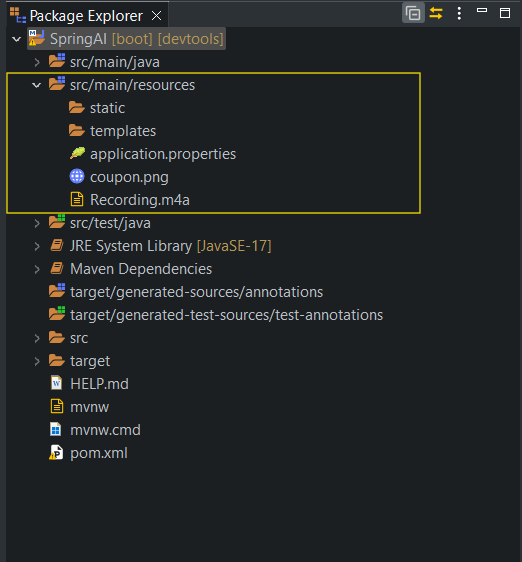

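Add an imgToText method to SpringAIService that sends an image to the chat model and returns a textual description of it. The method reads coupon.png from the classpath, so place that PNG file in the src/main/resources folder.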

```java
package com.example.demo.service;

import java.util.List;

import org.springframework.ai.chat.messages.Media;
import org.springframework.ai.chat.messages.UserMessage;
import org.springframework.ai.chat.prompt.Prompt;
import org.springframework.ai.openai.OpenAiChatModel;
import org.springframework.ai.openai.OpenAiChatOptions;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.core.io.ClassPathResource;
import org.springframework.core.io.Resource;
import org.springframework.stereotype.Service;
import org.springframework.util.MimeTypeUtils;

@Service
public class SpringAIService {

    @Autowired
    private OpenAiChatModel aiChatModel;

    public String imgToText() {
        // coupon.png must be on the classpath, e.g. in src/main/resources.
        Resource resource = new ClassPathResource("/coupon.png");

        // One user message carries both the instruction and the image.
        var userMessage = new UserMessage("Explain what you see in this picture.",
                List.of(new Media(MimeTypeUtils.IMAGE_PNG, resource)));

        // Request a vision-capable model explicitly instead of relying on the default chat model.
        var options = OpenAiChatOptions.builder().withModel("gpt-4o").build();

        return aiChatModel.call(new Prompt(List.of(userMessage), options))
                .getResult().getOutput().getContent();
    }
}
```
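MimeTypeUtils.IMAGE_PNG is hard-coded in imgToText, which only fits PNG files. If the image name varies, a small lookup such as the hypothetical ImageMimeTypes helper below can choose the MIME type from the file extension. It covers the formats OpenAI's vision-capable models accept (PNG, JPEG, WEBP, and non-animated GIF); the class and method names are illustrative assumptions.

```java
import java.util.Locale;
import java.util.Map;
import java.util.Optional;

// Hypothetical helper (not part of Spring AI): maps an image file name to the
// MIME type string to use when building the Media object.
final class ImageMimeTypes {

    // Formats accepted by OpenAI's vision-capable chat models.
    private static final Map<String, String> BY_EXTENSION = Map.of(
            "png", "image/png",
            "jpg", "image/jpeg",
            "jpeg", "image/jpeg",
            "webp", "image/webp",
            "gif", "image/gif");

    private ImageMimeTypes() {}

    // Returns the MIME type for a known image extension, or empty otherwise.
    static Optional<String> forFileName(String fileName) {
        int dot = fileName.lastIndexOf('.');
        if (dot < 0 || dot == fileName.length() - 1) {
            return Optional.empty();
        }
        String ext = fileName.substring(dot + 1).toLowerCase(Locale.ROOT);
        return Optional.ofNullable(BY_EXTENSION.get(ext));
    }
}
```

In the service, the result could be handed to Spring's MimeTypeUtils.parseMimeType, for example MimeTypeUtils.parseMimeType(ImageMimeTypes.forFileName("coupon.png").orElseThrow()).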

Text to Audio Processing: Converting Text to Speech

The Text to Audio Processing feature allows you to convert text into spoken audio using models like tts-1.

Implement the Text to Audio Model

Add a textToAudio method to SpringAIService that converts text into speech with tts-1.

```java
package com.example.demo.service;

import org.springframework.ai.openai.OpenAiAudioSpeechModel;
import org.springframework.ai.openai.OpenAiAudioSpeechOptions;
import org.springframework.ai.openai.api.OpenAiAudioApi;
import org.springframework.ai.openai.audio.speech.SpeechPrompt;
import org.springframework.ai.openai.audio.speech.SpeechResponse;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.stereotype.Service;

@Service
public class SpringAIService {

    @Autowired
    private OpenAiAudioSpeechModel aiAudioSpeechModel;

    public SpeechResponse textToAudio(String text) {
        // tts-1 with the "echo" voice, MP3 output, and normal speaking speed.
        OpenAiAudioSpeechOptions speechOptions = OpenAiAudioSpeechOptions.builder()
                .withModel("tts-1")
                .withVoice(OpenAiAudioApi.SpeechRequest.Voice.ECHO)
                .withResponseFormat(OpenAiAudioApi.SpeechRequest.AudioResponseFormat.MP3)
                .withSpeed(1.0f)
                .build();

        SpeechPrompt prompt = new SpeechPrompt(text, speechOptions);

        // The response wraps the generated audio as a byte array.
        return aiAudioSpeechModel.call(prompt);
    }
}
```
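OpenAI's speech endpoint accepts at most 4096 characters of input per request, so passing a long article straight to textToAudio fails. One way to handle this, sketched below under the assumption that splitting on spaces is acceptable for your text, is to break the input into chunks and call textToAudio once per chunk. SpeechChunker and split are hypothetical names.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper: splits text into chunks no longer than maxChars,
// preferring to cut at a space so words are not broken.
final class SpeechChunker {

    // OpenAI's documented input limit for the speech endpoint.
    static final int MAX_INPUT_CHARS = 4096;

    private SpeechChunker() {}

    static List<String> split(String text, int maxChars) {
        if (maxChars <= 0) {
            throw new IllegalArgumentException("maxChars must be positive");
        }
        List<String> chunks = new ArrayList<>();
        String remaining = text.strip();
        while (remaining.length() > maxChars) {
            // Cut at the last space within the window, or hard-cut if there is none.
            int cut = remaining.lastIndexOf(' ', maxChars);
            if (cut <= 0) {
                cut = maxChars;
            }
            chunks.add(remaining.substring(0, cut).strip());
            remaining = remaining.substring(cut).strip();
        }
        if (!remaining.isEmpty()) {
            chunks.add(remaining);
        }
        return chunks;
    }
}
```

Each chunk yields its own SpeechResponse; how you join or queue the resulting audio is left to your application.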

Audio to Text Processing: Transcribing Audio to Text

The Audio to Text Processing feature allows you to transcribe spoken audio into text using models like Whisper.

Implement the Audio to Text Model

Add an audioToText method to SpringAIService that transcribes an audio file into text. The method reads Recording.m4a from the classpath, so place your recording in the src/main/resources folder.

```java
package com.example.demo.service;

import org.springframework.ai.openai.OpenAiAudioTranscriptionModel;
import org.springframework.ai.openai.OpenAiAudioTranscriptionOptions;
import org.springframework.ai.openai.api.OpenAiAudioApi;
import org.springframework.ai.openai.audio.transcription.AudioTranscriptionPrompt;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.core.io.ClassPathResource;
import org.springframework.core.io.Resource;
import org.springframework.stereotype.Service;

@Service
public class SpringAIService {

    @Autowired
    private OpenAiAudioTranscriptionModel aiAudioTranscriptionModel;

    public String audioToText() {
        // Recording.m4a must be on the classpath, e.g. in src/main/resources.
        Resource resource = new ClassPathResource("/Recording.m4a");

        // Whisper treats the prompt as a context hint (vocabulary, spelling, style), not as an instruction.
        OpenAiAudioTranscriptionOptions transcriptionOptions = OpenAiAudioTranscriptionOptions.builder()
                .withLanguage("en")
                .withPrompt("Please explain the audio")
                .withTemperature(0f)
                .withResponseFormat(OpenAiAudioApi.TranscriptResponseFormat.TEXT)
                .build();

        AudioTranscriptionPrompt audioTranscriptionPrompt =
                new AudioTranscriptionPrompt(resource, transcriptionOptions);

        // With the TEXT response format, the output is the plain transcript.
        return aiAudioTranscriptionModel.call(audioTranscriptionPrompt).getResult().getOutput();
    }
}
```
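Before uploading, it can help to check the file locally: OpenAI's transcription endpoint accepts flac, mp3, mp4, mpeg, mpga, m4a, ogg, wav, and webm files up to 25 MB. The hypothetical TranscriptionFileCheck class below is a minimal sketch of that check; interpreting 25 MB as 25 * 1024 * 1024 bytes is an assumption.

```java
import java.util.Locale;
import java.util.Set;

// Hypothetical pre-flight check for audio sent to the transcription endpoint.
// Treating OpenAI's 25 MB upload limit as 25 * 1024 * 1024 bytes is an assumption.
final class TranscriptionFileCheck {

    static final long MAX_BYTES = 25L * 1024 * 1024;

    // File formats OpenAI's transcription endpoint accepts.
    private static final Set<String> FORMATS =
            Set.of("flac", "mp3", "mp4", "mpeg", "mpga", "m4a", "ogg", "wav", "webm");

    private TranscriptionFileCheck() {}

    // Throws IllegalArgumentException if the file type or size would be rejected.
    static void validate(String fileName, long sizeInBytes) {
        int dot = fileName.lastIndexOf('.');
        String ext = dot < 0 ? "" : fileName.substring(dot + 1).toLowerCase(Locale.ROOT);
        if (!FORMATS.contains(ext)) {
            throw new IllegalArgumentException("Unsupported audio format: " + fileName);
        }
        if (sizeInBytes > MAX_BYTES) {
            throw new IllegalArgumentException("Audio file exceeds 25 MB: " + sizeInBytes + " bytes");
        }
    }
}
```

With the Resource from audioToText, the call might look like TranscriptionFileCheck.validate(resource.getFilename(), resource.contentLength()); note that contentLength() can throw an IOException.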

Expose the API Endpoints

Create a REST controller that exposes one endpoint for each of these operations.

```java
package com.example.demo.controller;

import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.core.io.ByteArrayResource;
import org.springframework.http.HttpHeaders;
import org.springframework.http.HttpStatus;
import org.springframework.http.MediaType;
import org.springframework.http.ResponseEntity;
import org.springframework.web.bind.annotation.GetMapping;
import org.springframework.web.bind.annotation.RequestParam;
import org.springframework.web.bind.annotation.RestController;

import com.example.demo.service.SpringAIService;

@RestController
public class SpringAIController {

    @Autowired
    private SpringAIService aiService;

    // Returns the generated Image (URL or Base64 data) as JSON.
    @GetMapping("/text-to-img")
    ResponseEntity<Object> getTextToImgResponse(@RequestParam(value = "text") String text) {
        return new ResponseEntity<>(aiService.textToImg(text), HttpStatus.OK);
    }

    // Describes the image bundled in src/main/resources.
    @GetMapping("/img-to-text")
    ResponseEntity<Object> getImgToTextResponse() {
        return new ResponseEntity<>(aiService.imgToText(), HttpStatus.OK);
    }

    // Transcribes the audio file bundled in src/main/resources.
    @GetMapping("/audio-to-text")
    ResponseEntity<Object> getAudioToTextResponse() {
        return new ResponseEntity<>(aiService.audioToText(), HttpStatus.OK);
    }

    // Returns the generated MP3 as a file download.
    @GetMapping("/text-to-audio")
    ResponseEntity<ByteArrayResource> getTextToAudioResponse(@RequestParam(value = "text") String text) {
        byte[] audio = aiService.textToAudio(text).getResult().getOutput();
        ByteArrayResource arrayResource = new ByteArrayResource(audio);
        return ResponseEntity.ok()
                .header(HttpHeaders.CONTENT_DISPOSITION, "attachment; filename=textToAudioFile.mp3")
                .contentType(MediaType.APPLICATION_OCTET_STREAM)
                .contentLength(audio.length)
                .body(arrayResource);
    }
}
```
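The /text-to-audio endpoint always sends application/octet-stream with an .mp3 file name. If you let callers choose the speech format, the file name and Content-Type should change with it. The hypothetical AudioDownload helper below sketches that mapping (it needs Java 16 or later because it uses a record); the MIME types are common registrations, and any format not listed falls back to application/octet-stream.

```java
import java.util.Locale;

// Hypothetical helper for the /text-to-audio endpoint: derives the download
// file name and Content-Type from the requested speech format.
// Unlisted formats fall back to application/octet-stream.
final class AudioDownload {

    record Info(String fileName, String contentType) {}

    private AudioDownload() {}

    static Info forFormat(String baseName, String format) {
        String ext = format.toLowerCase(Locale.ROOT);
        String contentType = switch (ext) {
            case "mp3" -> "audio/mpeg";
            case "wav" -> "audio/wav";
            case "flac" -> "audio/flac";
            case "aac" -> "audio/aac";
            default -> "application/octet-stream";
        };
        return new Info(baseName + "." + ext, contentType);
    }
}
```

In the controller, info.fileName() would feed the Content-Disposition header and MediaType.parseMediaType(info.contentType()) the contentType(...) call.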

Test Your Multimodality Output

Start your Spring Boot application and test each model's response by calling the API endpoints with Postman or any other API testing tool.

- Test the API: open Postman and create a new GET request with each of the following URLs.

http://localhost:8080/text-to-img?text=A+sunset+over+the+mountains

- Description: This call generates an image from the description provided in the text query parameter. The response is the generated image serialized as JSON, including its URL.

http://localhost:8080/img-to-text

- Description: This call returns a textual description of the image in the resources folder (coupon.png).

http://localhost:8080/audio-to-text

- Description: This call transcribes the audio file in the resources folder (Recording.m4a) into text.

http://localhost:8080/text-to-audio?text=Hello,+world!

- Description: This call converts the provided text into speech. The response is an MP3 file download.
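If you prefer the command line to Postman, the same calls can be made with the JDK's built-in HttpClient (Java 11 or later). This is a sketch under assumptions: MultimodalClient and endpoint are hypothetical names, the application is assumed to run at http://localhost:8080, and the speech is saved to a local hello.mp3 file.

```java
import java.net.URI;
import java.net.URLEncoder;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;
import java.nio.file.Path;

// Hypothetical command-line client for the endpoints above, as an alternative to Postman.
// Assumes the Spring Boot application is running on http://localhost:8080.
final class MultimodalClient {

    static final String BASE_URL = "http://localhost:8080";

    // Builds an endpoint URI; the optional text is form-encoded (spaces become '+').
    static URI endpoint(String path, String text) {
        if (text == null) {
            return URI.create(BASE_URL + path);
        }
        return URI.create(BASE_URL + path + "?text=" + URLEncoder.encode(text, StandardCharsets.UTF_8));
    }

    public static void main(String[] args) throws Exception {
        HttpClient client = HttpClient.newHttpClient();

        // The image description comes back as plain text.
        HttpRequest describe = HttpRequest.newBuilder(endpoint("/img-to-text", null)).GET().build();
        System.out.println(client.send(describe, HttpResponse.BodyHandlers.ofString()).body());

        // The speech comes back as MP3 bytes; write them straight to a local file.
        HttpRequest speak = HttpRequest.newBuilder(endpoint("/text-to-audio", "Hello, world!")).GET().build();
        HttpResponse<Path> saved = client.send(speak, HttpResponse.BodyHandlers.ofFile(Path.of("hello.mp3")));
        System.out.println("Saved " + saved.body() + " (HTTP " + saved.statusCode() + ")");
    }
}
```

URLEncoder turns "Hello, world!" into Hello%2C+world%21, which Spring decodes back to the original text before it reaches the text parameter.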

Conclusion

Multimodality in Spring AI allows developers to create powerful, versatile applications that can process and generate multiple types of data.

By integrating models like DALL-E 3 for text-to-image generation, GPT-4o for image-to-text descriptions, tts-1 for text-to-audio conversion, and Whisper for audio transcription, you can build rich, interactive experiences for users.

The examples provided in this guide give you the tools to start implementing multimodal functionalities in your own Spring AI projects.

As you explore these capabilities, you’ll find even more opportunities to innovate and enhance your AI-driven applications.